The recent release of GPT-4 marks a significant milestone in artificial intelligence, particularly in natural language processing. In this article, we trace the history and development of Generative Pre-trained Transformers (GPT) and examine what new capabilities GPT-4 unlocks.

What are Generative Pre-trained Transformers?

Generative Pre-trained Transformers (GPT) are a type of deep learning model used to generate human-like text. Common uses include:

- answering questions

- summarizing text

- translating text to other languages

- generating code

- generating blog posts, stories, conversations, and other content types.

GPT models have a wide range of applications, and you can fine-tune them on specific data to get even better results. Because the models are already pre-trained, you save the compute, time, and other resources that training from scratch would require.

Before GPT

The current AI revolution in natural language only became possible with the invention of transformer models, which Google researchers introduced in 2017 and which underpin Google’s BERT, released in 2018. Before this, text generation was performed with other deep learning models, such as recurrent neural networks (RNNs) and long short-term memory networks (LSTMs). These performed well for outputting single words or short phrases but could not generate realistic longer content.

BERT’s approach was a major breakthrough because its pre-training is self-supervised rather than supervised. That is, it did not require an expensive annotated dataset for pre-training. Google used BERT to interpret natural language searches; however, it cannot generate text from a prompt.

GPT-1

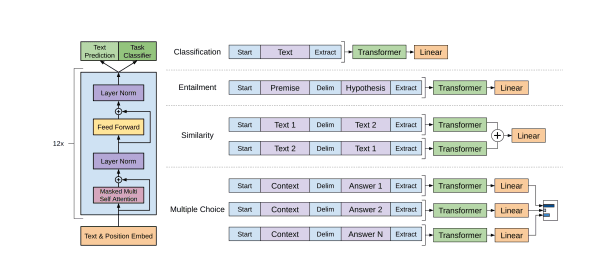

Transformer architecture | GPT-1 Paper

In 2018, OpenAI published a paper (Improving Language Understanding by Generative Pre-Training) on natural language understanding with their GPT-1 language model. This model was a proof-of-concept and was not released publicly.

GPT-2

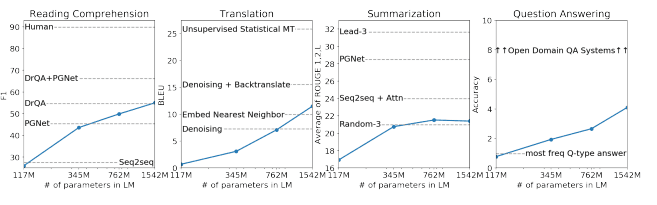

Model performance on various tasks | GPT-2 paper

The following year, OpenAI published another paper (Language Models are Unsupervised Multitask Learners) about their latest model, GPT-2. This time, the model was made available to the machine learning community and found some adoption for text generation tasks. GPT-2 could often generate a couple of sentences before breaking down. This was state-of-the-art in 2019.

GPT-3

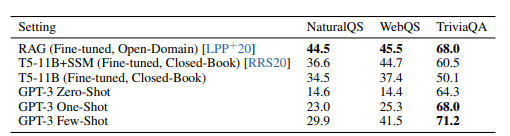

Results on three Open-Domain QA tasks | GPT-3 paper

In 2020, OpenAI published another paper (Language Models are Few-Shot Learners) about their GPT-3 model. The model had roughly 100 times more parameters than GPT-2 (175 billion versus 1.5 billion) and was trained on an even larger text dataset, resulting in better model performance. The model continued to be improved with various iterations known as the GPT-3.5 series, including the conversation-focused ChatGPT.

ChatGPT took the world by storm with its ability to generate pages of human-like text. It became the fastest-growing web application to date, reportedly reaching 100 million users in just two months.

You can learn more about GPT-3, its uses, and how to use it in a separate article.

What’s New in GPT-4?

GPT-4 has been developed to improve model “alignment”: the ability to follow user intentions while being more truthful and generating less offensive or dangerous output.

Performance improvements

As you might expect, GPT-4 improves on GPT-3.5 models in the factual correctness of its answers. It produces fewer “hallucinations,” where the model makes factual or reasoning errors, and scores 40% higher than GPT-3.5 on OpenAI’s internal factual performance benchmark.

It also improves “steerability,” which is the ability to change its behavior according to user requests. For example, you can instruct it to write in a different style, tone, or voice. Try starting prompts with “You are a garrulous data expert” or “You are a terse data expert” and have it explain a data science concept to you. You can read more about designing great prompts for GPT models here.
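As a rough illustration of steering, the sketch below builds two conversations that differ only in their opening persona instruction. It assumes the role-tagged message format (`system` and `user` roles) used by chat-style APIs. The `build_messages` helper and the sample question are hypothetical, introduced here for illustration only, and no model is actually called.

```python
# Hypothetical helper (not part of any library): builds a chat-style message
# list in which the first ("system") message sets the persona and the second
# ("user") message carries the actual question.
def build_messages(persona: str, question: str) -> list[dict[str, str]]:
    return [
        {"role": "system", "content": f"You are a {persona} data expert."},
        {"role": "user", "content": question},
    ]


# Sample question, chosen only for illustration.
QUESTION = "Explain what overfitting is."

garrulous = build_messages("garrulous", QUESTION)
terse = build_messages("terse", QUESTION)

# Only the system message differs between the two conversations.
for messages in (garrulous, terse):
    print(messages[0]["content"])
```

Sending each list to a chat model should give the same explanation in two very different voices, since the question itself is unchanged.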

A further improvement is in the model’s adherence to guardrails. If you ask it to do something illegal or unsavory, it is better at refusing the request.

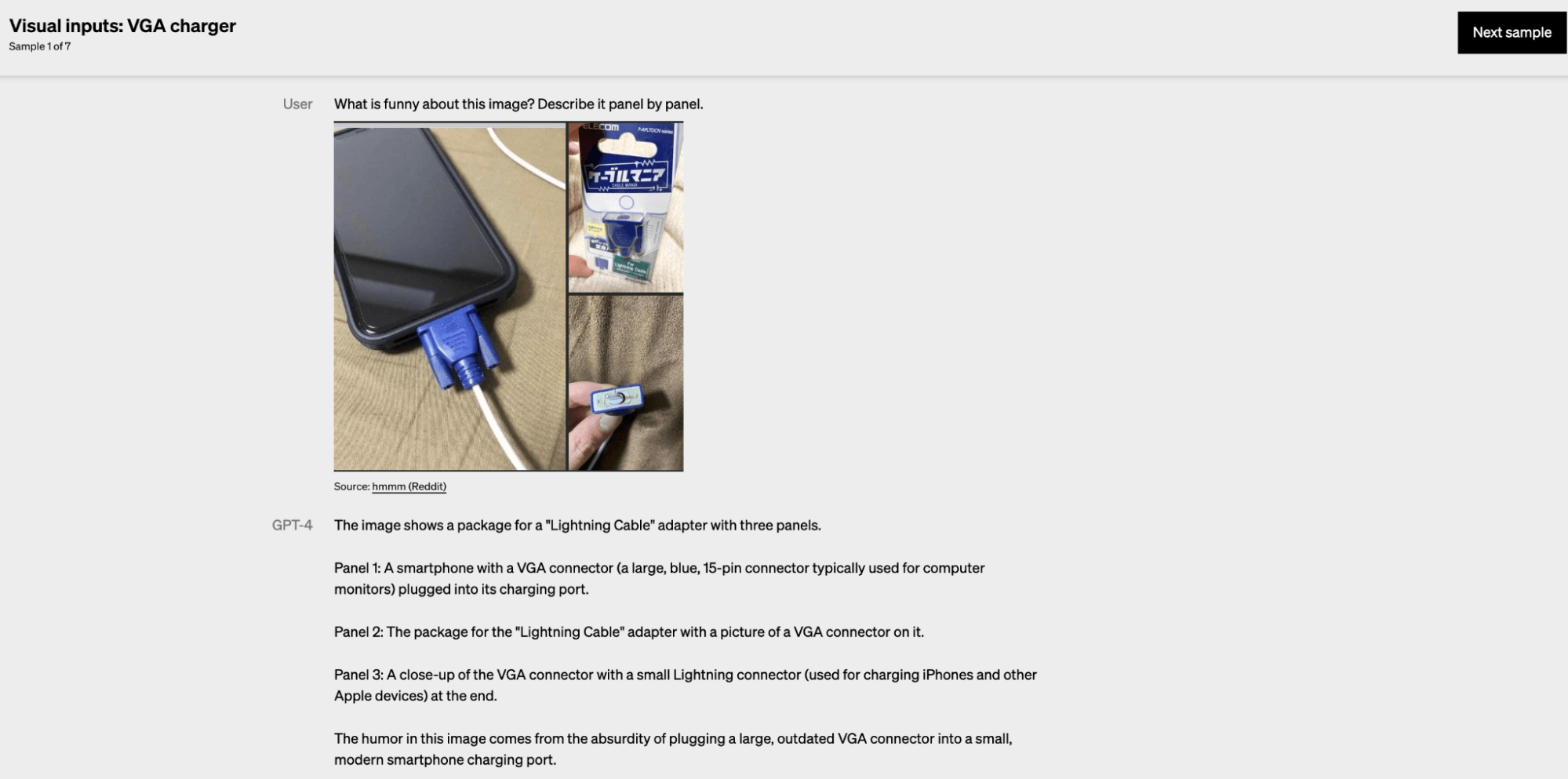

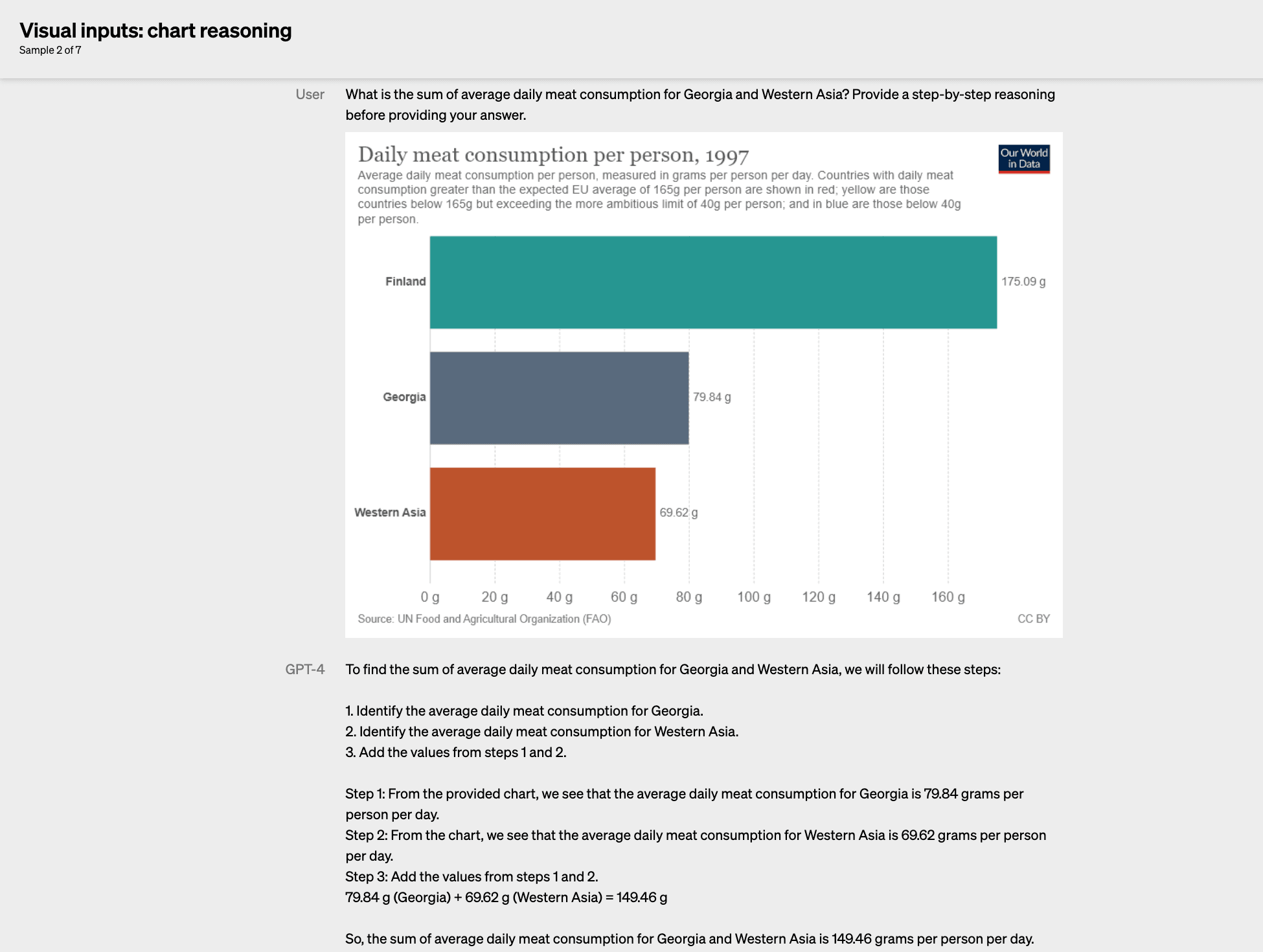

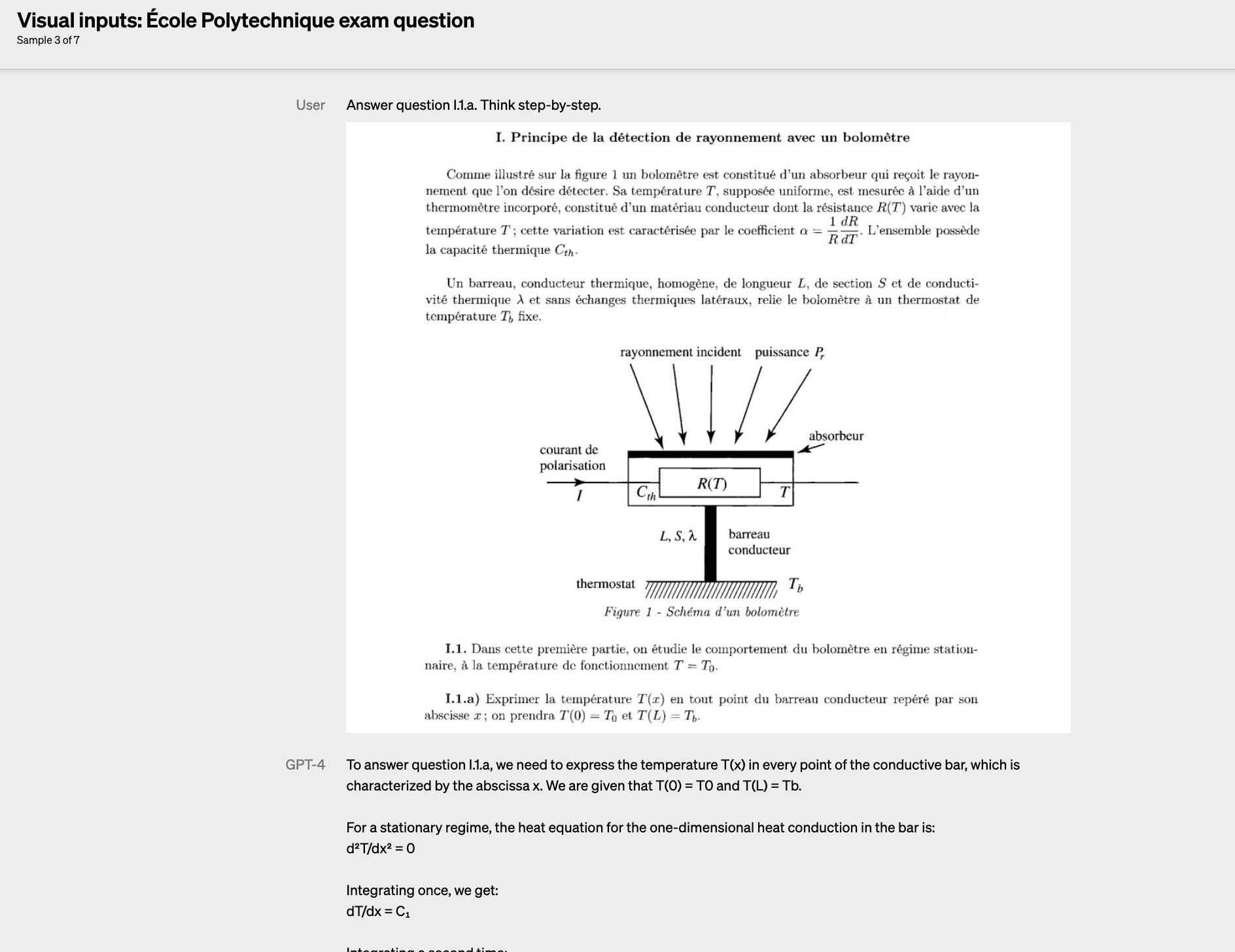

Using Visual Inputs in GPT-4

One major change is that GPT-4 can accept image inputs as well as text (image input is a research preview only and is not yet available to the public). Users can specify any vision or language task by entering interspersed text and images.

The showcased examples highlight GPT-4 correctly interpreting complex imagery such as charts, memes, and screenshots from academic papers.

You can see examples of the vision input below.

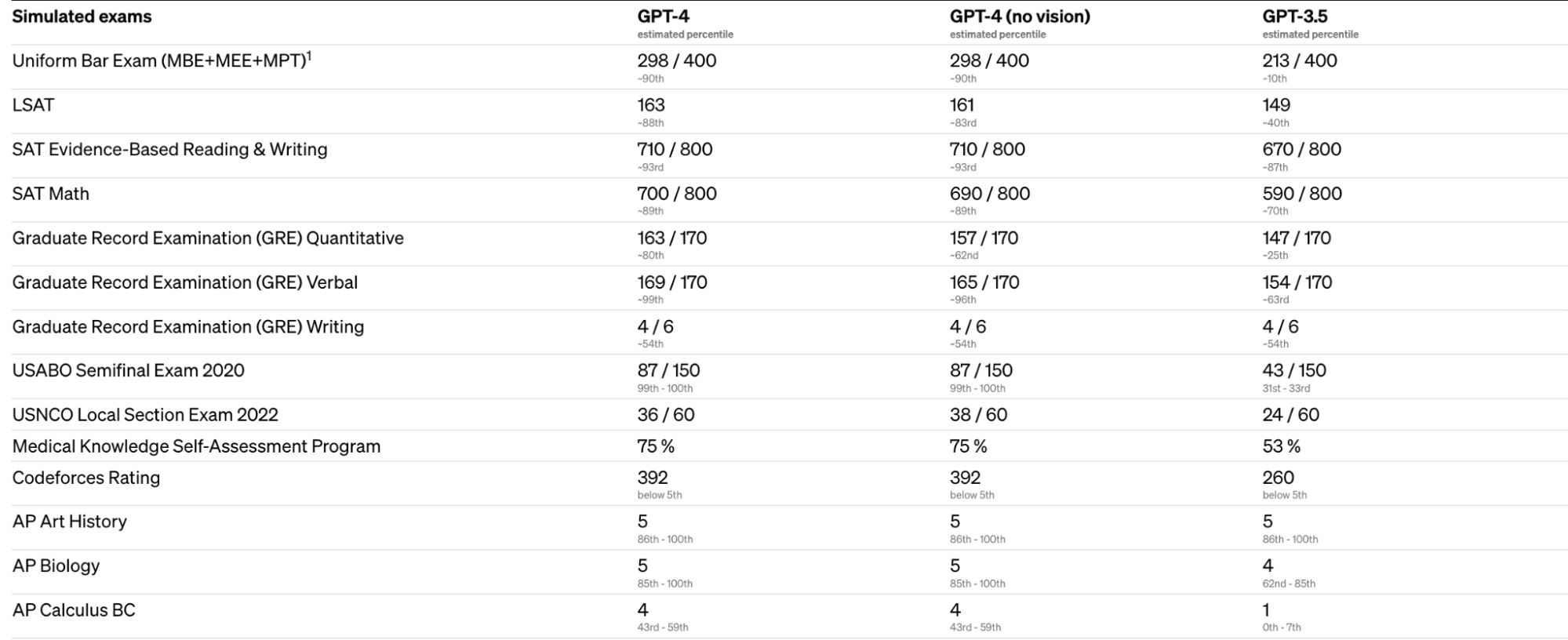

GPT-4 Performance Benchmarks

OpenAI evaluated GPT-4 by simulating exams designed for humans, such as the Uniform Bar Examination and LSAT for lawyers, and the SAT for university admission. The results showed that GPT-4 achieved human-level performance on various professional and academic benchmarks.

OpenAI also evaluated GPT-4 on traditional benchmarks designed for machine learning models, where it outperformed existing large language models and most state-of-the-art models that may include benchmark-specific crafting or additional training protocols. These benchmarks included multiple-choice questions in 57 subjects, commonsense reasoning around everyday events, grade-school multiple-choice science questions, and more.

OpenAI tested GPT-4’s capability in other languages by translating the MMLU benchmark, a suite of 14,000 multiple-choice problems spanning 57 subjects, into various languages using Azure Translate. In 24 out of 26 languages tested, GPT-4 outperformed the English-language performance of GPT-3.5 and other large language models.
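To make the comparison concrete, here is a minimal sketch of how a multiple-choice benchmark can be scored per language and compared against a fixed baseline. It is not OpenAI's evaluation code: the answer key, predictions, language names, and baseline score are toy values made up for illustration, and `accuracy` is a hypothetical helper.

```python
def accuracy(predictions: list[str], answer_key: list[str]) -> float:
    """Return the fraction of multiple-choice questions answered correctly."""
    if not answer_key or len(predictions) != len(answer_key):
        raise ValueError("predictions and answer_key must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, answer_key))
    return correct / len(answer_key)


# Toy data, made up for illustration (these are not real benchmark results).
answer_key = ["A", "C", "B", "D"]
predictions_by_language = {
    "lang_1": ["A", "C", "B", "D"],  # 4 of 4 correct
    "lang_2": ["A", "C", "B", "A"],  # 3 of 4 correct
    "lang_3": ["B", "C", "A", "A"],  # 1 of 4 correct
}
# Hypothetical English-language score of an older model.
baseline_accuracy = 0.70

# Score every language against the same answer key.
scores = {
    lang: accuracy(preds, answer_key)
    for lang, preds in predictions_by_language.items()
}
# Keep the languages whose score exceeds the baseline.
languages_above_baseline = [
    lang for lang, score in scores.items() if score > baseline_accuracy
]

print(scores)
print(f"{len(languages_above_baseline)} of {len(scores)} languages beat the baseline")
```

A statement such as "24 out of 26 languages" is the same kind of count, taken over the translated benchmark and with GPT-3.5's English-language score as the baseline.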

Overall, GPT-4’s more grounded results indicate significant progress in OpenAI’s effort to develop AI models with increasingly advanced capabilities.

How to Gain Access to GPT-4

OpenAI is releasing GPT-4’s text input capability via ChatGPT. It is currently available to ChatGPT Plus users. There is a waitlist for the GPT-4 API.

Public availability of the image input capability has not yet been announced.

OpenAI has open-sourced OpenAI Evals, a framework for automated evaluation of AI model performance, so that anyone can report shortcomings in OpenAI’s models and help guide further improvements.